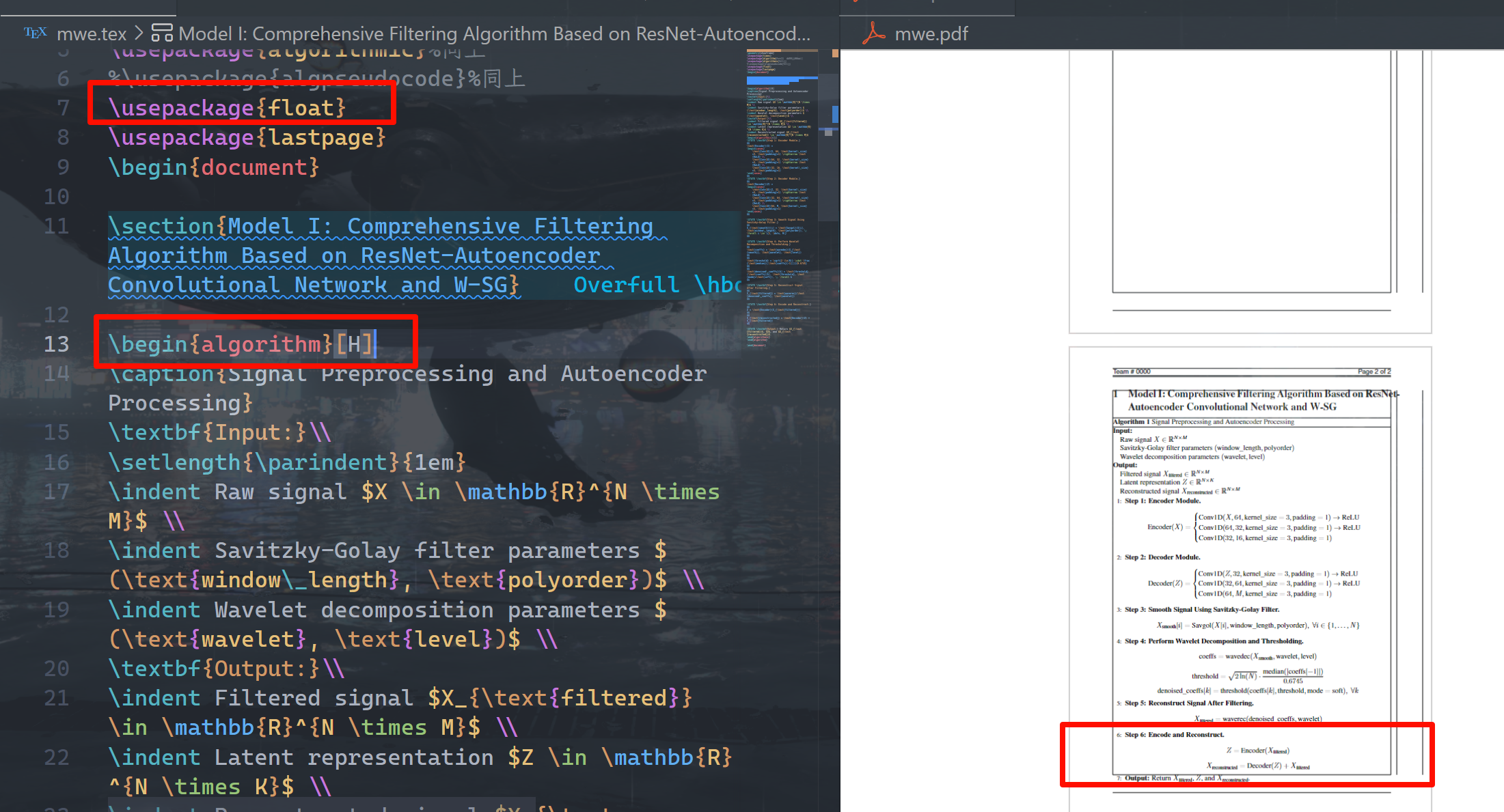

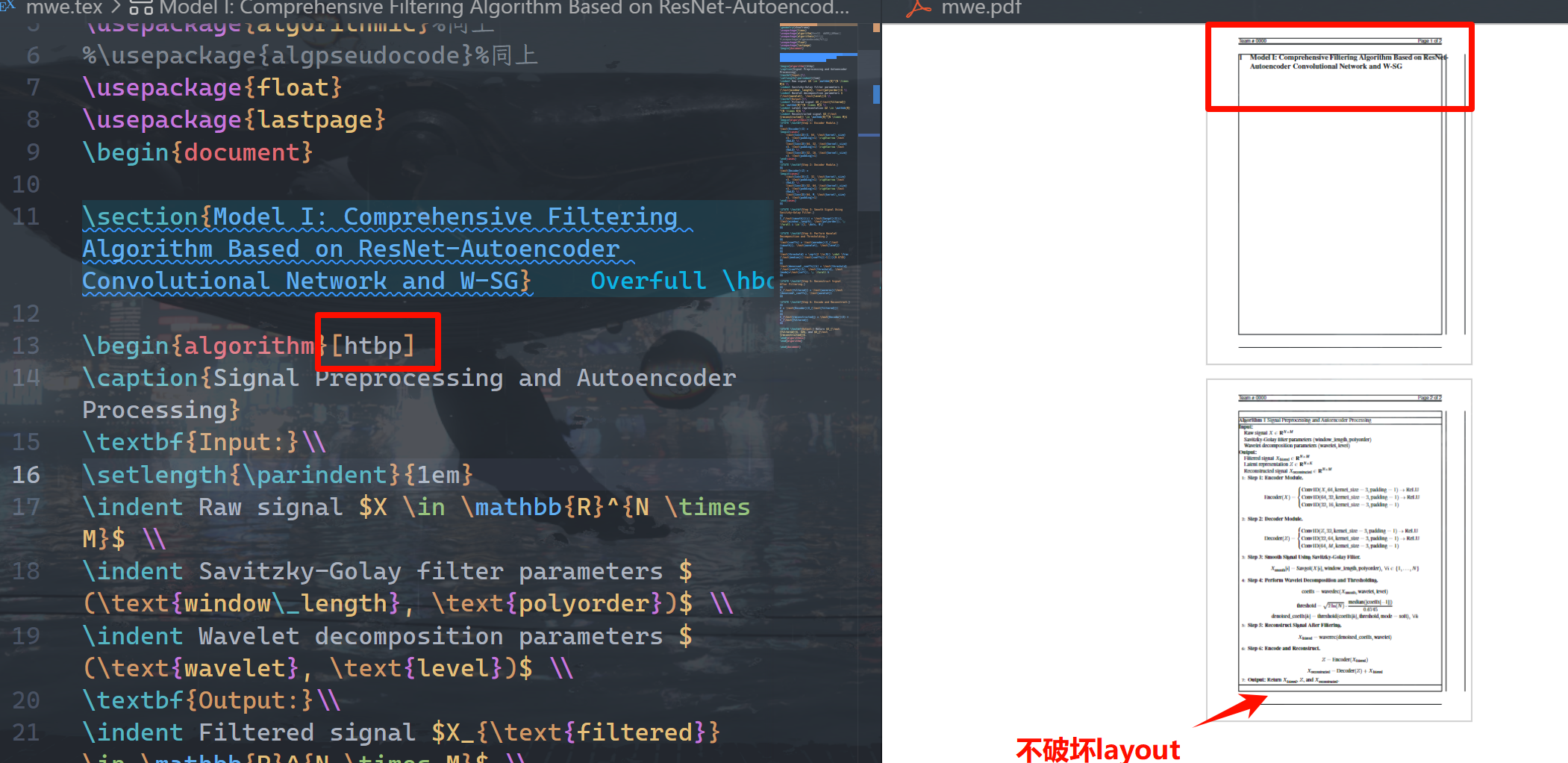

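Why does a blank page appear before the algorithm I inserted?

Inserting the algorithm produces a blank page; after I delete the algorithm, the layout returns to normal.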

\documentclass{mcmthesis}

%\setlength{\parskip}{0.1em}

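%\documentclass[CTeX = true]{mcmthesis} % when using the CTeX suite, comment out the previous line and use this one instead

\mcmsetup{tstyle=\color{black}\bfseries,% color and bold style of the problem and team numbers; black can be replaced with another color

tcn = H034, problem = B, % team control number and problem letter

sheet = true, titleinsheet = true, keywordsinsheet = true,% what the summary sheet displays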

titlepage = false, abstract = true}

% four font options to choose from

\usepackage{comment}

\usepackage{times}

%\usepackage{newtxtext,newtxmath} % CTeX does not ship this font; use txfonts instead, or switch to a recent TeX Live.

%\usepackage{palatino}

%\usepackage{txfonts}

\usepackage{hyperref} % hyperlink support

\usepackage{algorithm}% added by me for writing pseudocode

\usepackage{algorithmic}% same as above

%\usepackage{algpseudocode}% same as above

\usepackage{graphicx}

\usepackage{subcaption}% for figures

\usepackage{indentfirst} % indents the first paragraph; comment this out to disable it.

\usepackage{lipsum}

\usepackage{enumitem}

\title{\textbf{Mastering the Magnetic Realm: Unveiling Earth's Secrets through Multi-Sensor Synergy}}

\author{\small \href{https://www.latexstudio.net/}

{\includegraphics[width=7cm]{mcmthesis-logo}}}

\date{\today}

\begin{document}

To describe the model clearly, we define the following symbols:

\begin{table}[H] % force the table to stay at this position

\caption{Notations Table} % table caption

\begin{center}

\centering % center the table

\begin{tabularx}{\linewidth}{lX} % limit the table width to \linewidth

\toprule[1.5pt]

\textbf{Notations} & \textbf{Explanation} \\

\midrule[1pt]

$\mathcal{L}$ & Loss function: difference between predicted and actual values. \\

$N$ & Total number of time points or samples. \\

$Y_{1}(t), (i=1)$ & Observed value at time $t$ for Sensor 1. \\

$\hat{Y}_{1}(t), (i=1)$ & Predicted value at time $t$ for Sensor 1. \\

$A_{i}(t), (i=1, 2, 3, \dots)$ & Additional signal of Sensor $i$ at time $t$. \\

$Y_{i}(t), (i=1, 2, 3, \dots)$ & Actual measurement from Sensor $i$. \\

$\hat{Y}_{i}(t), (i=1, 2, 3, \dots)$ & Predicted measurement for Sensor $i$. \\

$N_{\text{time}}$ & Total number of time intervals. \\

$t_{0,j}$ & Start time of the $j$-th interval. \\

$t_{0,j}+24$ & End time of the $j$-th interval. \\

$\Delta M_{x,i}(t), \Delta M_{y,i}(t), \Delta M_{z,i}(t)$ & Change in geomagnetic field for Sensor $i$. \\

$B_{1}, B_{2}, \dots, B_{6}$ & Symbols for different concepts. \\

$G_1, G_2, G_3$ & Components of the objective function. \\

$B_3, B_4, B_5, B_6$ & Objective functions in Models II and III. \\

$\text{MSE}$ & Mean Squared Error: evaluates filtering performance. \\

$\text{SNR}$ & Signal-to-Noise Ratio: measures signal quality. \\

$\hat{x}(t)$ & Reconstructed signal: denoised version of $x(t)$. \\

$x(t)$ & Raw signal with potential noise. \\

$T$ & Noise threshold in wavelet transformation. \\

$x_1(t)$ & Geomagnetic signal recorded by Sensor 1. \\

$\hat{x}_i(t)$ & Signals from other sensors. \\

$y(t)$ & Magnetic signal from the target object. \\

\bottomrule[1.5pt]

\end{tabularx}

\label{tab:notations} % label for cross-references

\end{center}

\end{table}

\vspace{-10pt} % reduce the vertical space before the following content

% flush the float queue so that the body text follows the table directly

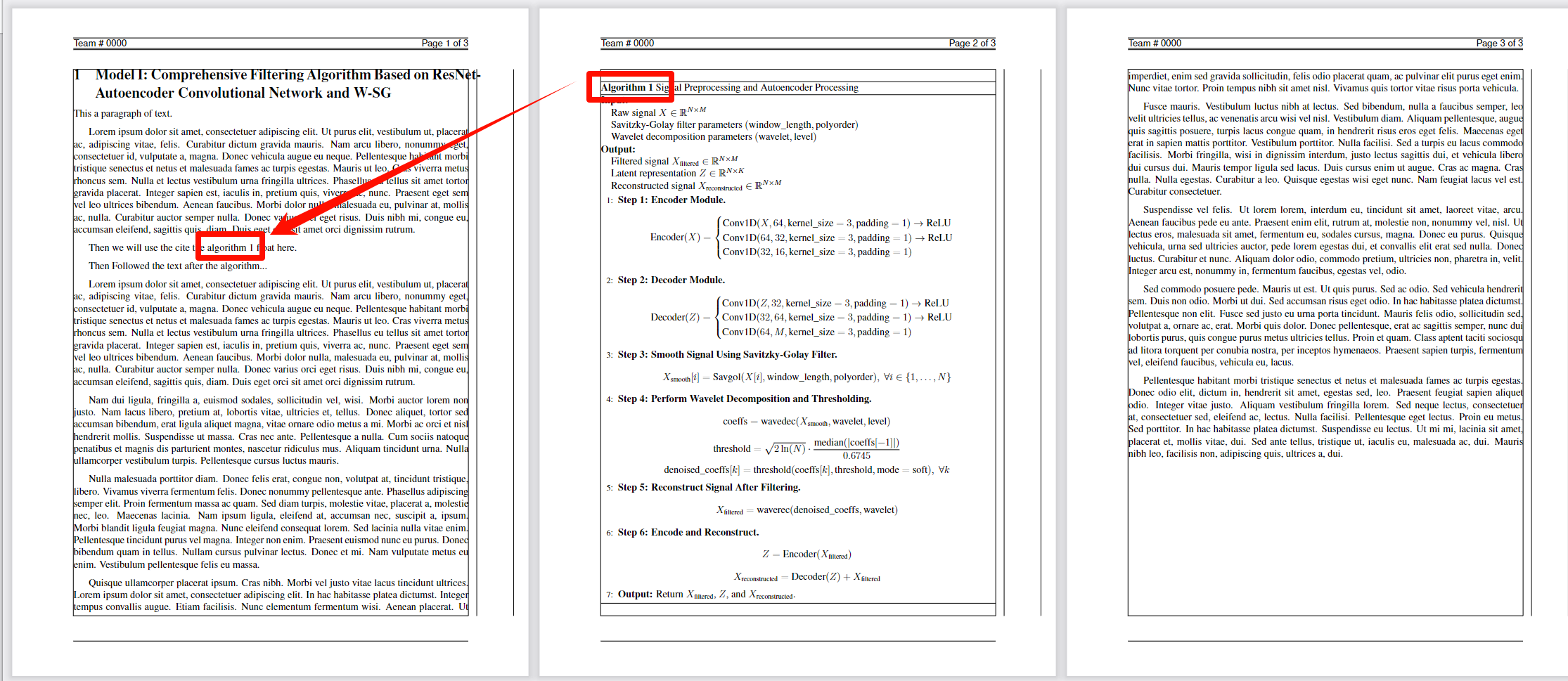

\section{Model I: Comprehensive Filtering Algorithm Based on ResNet-Autoencoder Convolutional Network and W-SG}

\begin{algorithm}[H]

\caption{Signal Preprocessing and Autoencoder Processing}

\textbf{Input:}\\

\setlength{\parindent}{1em}

\indent Raw signal $X \in \mathbb{R}^{N \times M}$ \\

\indent Savitzky-Golay filter parameters $(\text{window\_length}, \text{polyorder})$ \\

\indent Wavelet decomposition parameters $(\text{wavelet}, \text{level})$ \\

\textbf{Output:}\\

\indent Filtered signal $X_{\text{filtered}} \in \mathbb{R}^{N \times M}$ \\

\indent Latent representation $Z \in \mathbb{R}^{N \times K}$ \\

\indent Reconstructed signal $X_{\text{reconstructed}} \in \mathbb{R}^{N \times M}$ \\

\begin{algorithmic}[1]

\STATE \textbf{Step 1: Encoder Module.}

$$

\text{Encoder}(X) =

\begin{cases}

\text{Conv1D}(X, 64, \text{kernel\_size}=3, \text{padding}=1) \rightarrow \text{ReLU} \\

\text{Conv1D}(64, 32, \text{kernel\_size}=3, \text{padding}=1) \rightarrow \text{ReLU} \\

\text{Conv1D}(32, 16, \text{kernel\_size}=3, \text{padding}=1)

\end{cases}

$$

\STATE \textbf{Step 2: Decoder Module.}

$$

\text{Decoder}(Z) =

\begin{cases}

\text{Conv1D}(Z, 32, \text{kernel\_size}=3, \text{padding}=1) \rightarrow \text{ReLU} \\

\text{Conv1D}(32, 64, \text{kernel\_size}=3, \text{padding}=1) \rightarrow \text{ReLU} \\

\text{Conv1D}(64, M, \text{kernel\_size}=3, \text{padding}=1)

\end{cases}

$$

\STATE \textbf{Step 3: Smooth Signal Using Savitzky-Golay Filter.}

$$

X_{\text{smooth}}[i] = \text{Savgol}(X[i], \text{window\_length}, \text{polyorder}), \; \forall i \in \{1, \dots, N\}

$$

\STATE \textbf{Step 4: Perform Wavelet Decomposition and Thresholding.}

$$

\text{coeffs} = \text{wavedec}(X_{\text{smooth}}, \text{wavelet}, \text{level})

$$

$$

\text{threshold} = \sqrt{2 \ln(N)} \cdot \frac{\text{median}(|\text{coeffs}[-1]|)}{0.6745}

$$

$$

\text{denoised\_coeffs}[k] = \text{threshold}(\text{coeffs}[k], \text{threshold}, \text{mode}=\text{soft}), \; \forall k

$$

\STATE \textbf{Step 5: Reconstruct Signal After Filtering.}

$$

X_{\text{filtered}} = \text{waverec}(\text{denoised\_coeffs}, \text{wavelet})

$$

\STATE \textbf{Step 6: Encode and Reconstruct.}

$$

Z = \text{Encoder}(X_{\text{filtered}})

$$

$$

X_{\text{reconstructed}} = \text{Decoder}(Z) + X_{\text{filtered}}

$$

\STATE \textbf{Output:} Return $X_{\text{filtered}}$, $Z$, and $X_{\text{reconstructed}}$.

\end{algorithmic}

\end{algorithm}
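
One possible cause, offered as an assumption rather than a confirmed diagnosis: with the `[H]` specifier the `algorithm` float is typeset as a single unbreakable box at the point where it appears. If that box is taller than the space left on the current page, it moves as a whole to the next page and leaves the remainder of the current page empty. A minimal sketch for testing this is to relax the placement specifier and check whether the gap disappears; the body of the algorithm is abbreviated here and otherwise unchanged.

```latex
% Sketch only: assumes the blank space is caused by the [H] placement.
% [htbp] allows LaTeX to float the algorithm, so the section heading and
% surrounding text can fill the current page instead of leaving it empty.
\begin{algorithm}[htbp]
\caption{Signal Preprocessing and Autoencoder Processing}
\begin{algorithmic}[1]
\STATE \textbf{Step 1: Encoder Module.}
% ... remaining steps as in the original listing ...
\end{algorithmic}
\end{algorithm}
```

If the gap goes away with `[htbp]`, the height of the algorithm box is the likely culprit. Shortening the box, for example by setting the short formulas inline instead of as displays, may then allow `[H]` to be kept.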